Typography Matching

Project Overview

Scholars interested in the materiality of texts frequently ask how their objects of study were produced: for instance, what ink and paper were used in printing, or which typefaces and images were used and reused over time.

These bibliographical methods require careful attention to detail, made more complicated by each text’s individual quirks and the possibility of various printings or editions being housed at libraries and archives across the globe.

Data science tools, such as DataLab’s Archive-Vision (arch-v), can assist book historians and bibliographers in their examinations of these materials by identifying recurring patterns across a set of images.

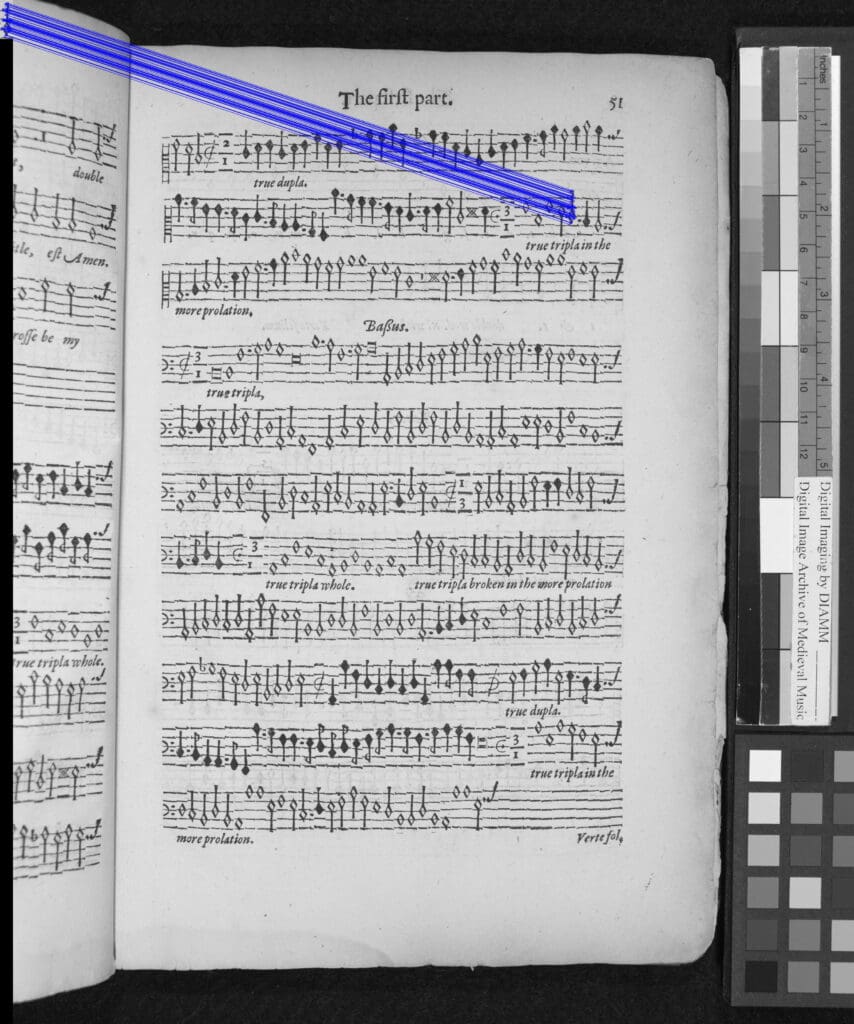

Partnering with Professor Emeritus Jessie Ann Owens from the Department of Music, DataLab’s Arthur Koehl modified arch-v to explore the feasibility of matching particular damaged types within an archive of scanned images from an early printing of Thomas Morley’s 16th-century An Introduction to Music.

Given a seed image of a damaged type, arch-v can be trained to identify similar instances across the set of images. Template matching successfully identified a number of recurring damaged types across the book, though further tuning may be needed to narrow the set of matches and improve accuracy.
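
To make the idea of template matching concrete, the following is a minimal sketch and not arch-v's actual implementation. It slides a small seed image across a larger page image and scores each position with zero-mean normalized cross-correlation, keeping positions above a threshold. The function names (`ncc`, `match_template`), the threshold value, and the toy pixel grids are all assumptions made for illustration; real scans would be loaded as image arrays, and an image library such as OpenCV (`cv2.matchTemplate`) offers a comparable operation.

```python
from math import sqrt

def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equal-size pixel grids."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p = sum(p) / len(p)
    mean_t = sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = sqrt(sum((a - mean_p) ** 2 for a in p) *
               sum((b - mean_t) ** 2 for b in t))
    # A perfectly flat patch has no variance; treat it as "no match".
    return num / den if den else 0.0

def match_template(page, seed, threshold=0.9):
    """Return (row, col, score) for every window scoring at least `threshold`."""
    page_h, page_w = len(page), len(page[0])
    seed_h, seed_w = len(seed), len(seed[0])
    hits = []
    for y in range(page_h - seed_h + 1):
        for x in range(page_w - seed_w + 1):
            patch = [row[x:x + seed_w] for row in page[y:y + seed_h]]
            score = ncc(patch, seed)
            if score >= threshold:
                hits.append((y, x, score))
    return hits

# Hypothetical 3x3 "damaged type" seed (9 = ink, 0 = blank paper).
seed = [[0, 9, 0],
        [9, 0, 9],
        [0, 9, 9]]

# Hypothetical 6x8 blank page with the seed stamped at two positions.
page = [[0] * 8 for _ in range(6)]
for top, left in [(1, 1), (2, 5)]:
    for i, row in enumerate(seed):
        for j, value in enumerate(row):
            page[top + i][left + j] = value

hits = match_template(page, seed)
print(hits)
```

Lowering `threshold` in this sketch admits looser matches, while raising it narrows the results toward near-exact copies of the seed, which is the kind of tuning the paragraph above alludes to.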

Overall, the project demonstrates that computational image recognition technologies can successfully produce such matches, and it suggests ways to extend arch-v’s capabilities in support of a variety of text-focused research.

DataLab Contact

- Carl Stahmer and Arthur Koehl (technical lead)